Asking AI gods: What does "Rational Sheep" mean?

Oh my, it's pretty obvious that some Big Tech czars don't want to "get" religion

Remember that old cliche, “garbage in, garbage out”?

If not, here is a quick reminder, courtesy of (#DUH) the AI Overview function built into the basic Google search engine:

"Garbage in, garbage out" (GIGO) is a concept that states that the quality of a system's output is determined by the quality of its input. This means that if a system is given low-quality or incorrect data, it will produce low-quality or incorrect results.

Ah, but was there “garbage” in the information that shaped that AI answer?

That’s a joke. Maybe.

Let me explain. This weekend, I wrote a think piece (“‘Good’ religion vs. ‘bad’ religion — in mass media and life”) that opened with a wave of thoughts and information about the February 2 decision, a year ago, to shutter GetReligion.org after 20 years of work dissecting news coverage of religion. The website remains online as a massive, searchable, research archive.

At the end of that post (which I sent to free-content subscribers), I asked if the “good” religion (progressive) vs. “bad” religion (orthodox) dynamic that the GetReligionistas detected in mainstream journalism can be seen in Hollywood and Big Tech.

When I say “Big Tech,” I am talking about the companies that build the AI programs that shape the world of social media, search engines and, increasingly, text-generating websites. We will leave armies of robots, flocks of scary drones and self-driving vehicles out of the discussion, at this point.

Ask teachers — at any level of education — if the AI revolution is shaping “work” submitted by students. Ask the professionals who manage news websites if the bean-counter chatter is getting louder about using AI as a way to trim the cost of “some” content production (maybe replacing old-school reporting and writing).

Asking some new questions about “good” religion vs. “bad” religion tensions made me think about AI. What would happen if the AI gods programmed inaccurate or biased information about religion into their digital machines? What if — when scanning “libraries” in the online world — they simply avoided religious issues and information altogether?

To probe this, I decided to ask the online gods this self-serving question: “What is Rational Sheep?” I also asked, “What does Rational Sheep mean?”, but that didn’t change the results.

Let’s start with the elephant in the classroom. ChatGPT offered this:

Rational Sheep is a term that is often used in discussions of decision-making, particularly in contexts related to economics, psychology, and social behavior. It refers to individuals who, despite potentially having access to the information needed to make informed or "rational" decisions, follow the majority or popular opinion without critically evaluating it.

The term plays on the contrast between "rational" (a reasoned, thoughtful decision-making process) and "sheep" (following the crowd without independent thought). It's like when people go along with a trend or belief just because others are doing it, rather than questioning or analyzing the decision for themselves. This can happen in a lot of areas, from consumer behavior to politics.

Some scholars or thinkers use it to criticize herd behavior in markets or societies, where people may act in ways that are not in their best interest or may be suboptimal because they’re simply mimicking the choices of others.

Really now?

I have to admit that I have never seen “Rational Sheep” used that way. Is it possible that “economics,” “psychology,” “social behavior” and “politics” pretty much define the subjects that the ChatGPT programmers considered “real”?

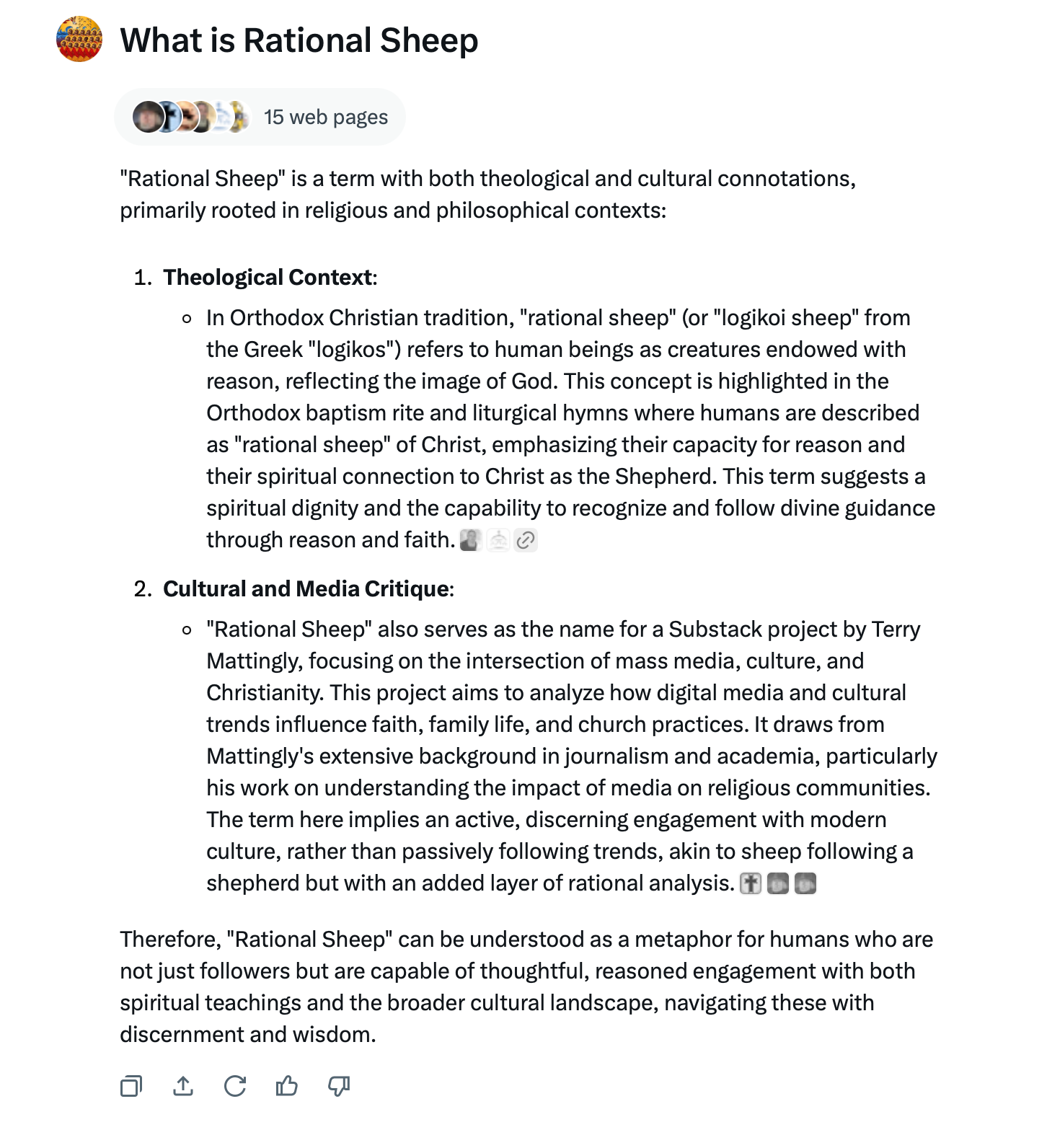

OK, what happens when you do a basic Google search on this subject? That yielded a very different set of results that looks like this (in a screen grab).

What about Grok, the AI machine built into the X universe that is, ultimately, managed by programmers hired by Elon Musk and his associates?

The first time I asked my question to Grok, I got this answer:

The second time I asked Grok that question it went straight to the Rational Sheep Substack project and stayed there. I would argue that opening its AI response with the theological background material was the better option.

It would appear that the creators of Grok allowed their AI program to consult websites containing information about religion and faith. OK, maybe it helps that I post Rational Sheep material on X.

At this point, let me stress that I am not an expert in AI text generation. However, I was able to find some other AI options that I could test without creating a personal account that could lead to waves of spam emails linked to credit cards (I am rather cynical about these things).

However, the Deep AI website led me back into religion-free ChatGPT territory, with this short response:

I couldn't find any significant information available on the term "Rational Sheep." It is possible that Rational Sheep is a term from a lesser-known context or publication, or it could be a misspelling of a more commonly known term. However, I found that 'Rational Sheep' is sometimes mentioned in discussions related to psychology, philosophy and economics, but without more context, it's difficult to pinpoint the specific concept or theory associated with this term.

Ouch! It’s all about the word “significant,” isn’t it? What about centuries of theological discourse, which can be found online? OK, and what about this Substack project, which contains almost a year of content?

Let’s try a few more. The TinyWow website appears to have gone straight to the information sources used by, wait for it, ChatGPT:

The term "Rational Sheep" refers to individuals who exhibit both rationality and a tendency to follow the crowd. In other words, they are individuals who are capable of critical thinking and making informed decisions, but who also tend to conform to societal norms and expectations. This term can be seen as an oxymoron, as rationality and conformity may seem contradictory. However, it highlights the complexity of human behavior and the balance that individuals must strike between independent thinking and social integration.

Being a Rational Sheep involves being able to think critically and make reasoned decisions based on evidence and logic. These individuals are not swayed by emotions or biases, but instead rely on rationality and reasoning to guide their actions. At the same time, they are aware of the importance of social norms and expectations, and are willing to conform to these in order to maintain harmony within their social groups. This ability to navigate between independent thinking and social conformity is what sets Rational Sheep apart from others who may be entirely swayed by emotions or blindly follow the crowd.

Although being a Rational Sheep may seem like a paradox, it is actually a valuable skill in today's society. By combining critical thinking with social awareness, individuals can strike a balance between independence and integration, and make informed decisions that benefit both themselves and their communities. Being a Rational Sheep requires a level of self-awareness and introspection that allows individuals to assess their own beliefs and behaviors, while also considering the perspectives of others. Ultimately, the term "Rational Sheep" challenges us to think more deeply about the complexities of human nature and the ways in which we navigate the world around us.

I also asked my question to the Typli text generator and, in response, received a 550-word take on the religion-free ChatGPT file. It ended with this basic statement of worldview:

The term "Rational Sheep" serves as a reminder of the delicate balance between independent thought and social influence. While it is natural to seek validation from others, it is essential to cultivate critical thinking skills and question the status quo. By understanding the dynamics of Rational Sheep behavior, individuals can make more informed decisions that align with their values and beliefs.

In a world where information is abundant and opinions are diverse, striving for rationality amidst the noise of conformity is crucial. Embracing our individuality while remaining open to the insights of others can lead to a more balanced and thoughtful approach to decision-making.

That last sentence? Well, practice what you preach.

In conclusion, I think that it’s rather obvious that what we are seeing transcends the old “garbage in, garbage out” equation.

In a way, we are going to find out which parts of life the clerics in the Big Tech college of cardinals consider “real” and which they consider, well, “not real,” or maybe “pretend.”

Finally, consider this Bernard Marr list of the Top 10 limitations that experts are already seeing with ChatGPT and AI in general. Yes, I added some Rational Sheep-friendly bold-italics type to his list:

1. It has limitations understanding context, including sarcasm and humor.

2. It lacks common sense and background knowledge.

3. It contains bias and prejudice due to training data.

4. The model has difficulty generating long-form, structured content.

5. It can’t really handle multiple tasks or objectives.

6. It has limitations in handling sensitive or private information.

7. Its sensitivity to typos, grammatical errors and misspellings is limited.

8. It has difficulty in ensuring the accuracy of information.

9. There is often a need for fine-tuning the model for specific use cases.

10. There are limitations in terms of computational cost and power required to run the model.

For millions of people (maybe billions), the online world is now the “real” world in terms of the information that shapes their work. Think about that.

In the real world, statistically speaking, religious traditions and beliefs play a major role in the actions of the majority of “real” people around the world. Do the gods of AI know that or are they choosing to ignore that fact?

Just asking.

Readers! What are you seeing in your early encounters with ChatGPT and other doorways into the AI world?

Remember the 60 Minutes episode where AI produced an impressive document with a list of sources? The only problem is that it had completely fabricated the references.

https://www.facebook.com/60minutes/videos/googles-chatbot-failed-60-minutes-fact-check/2198479880340248/

I'm definitely not planning on looking to AI for religious...anything.

I asked Copilot if it was true that the US had negotiated with Russia to limit NATO expansion in order for the wall to come down. Copilot said ‘yes’ and gave a good historical synopsis.

I then asked if the US had violated that agreement regarding Russia and Ukraine. Copilot said not exactly, because political negotiations are always nuanced. Good negotiators leave enough legal nuance in their agreements to allow for changes in conditions.

I then asked: If the ultimate goal was NATO expansion all along, wasn’t the US deceptive? It again gave the reason above.

Next I asked whether, in the context of the westward expansion of the United States, the US Gov’t used the same ‘negotiation techniques’ to break treaties with Native American tribes in order to reach its ultimate goal of Manifest Destiny. Copilot said that the US Gov’t did break treaties and appeared deceptive, essentially speaking with a forked tongue.

I then asked why, in the case of Ukraine, hiding one’s ultimate goal of NATO expansion in negotiations was acceptable, but that same technique in negotiations with Native Americans was wrong. The answer was that political landscapes change and good negotiators allow for nuance to adapt to the change. There was zero emphasis on the importance of honesty and integrity in negotiations.

AI does not have a moral compass!